The Energy Impact of Artificial Intelligence


A N3XTCODER series

Implementing AI for Social Innovation

Welcome to the N3xtcoder series on Implementing AI for Social Innovation.

In this series we are looking at ways in which Artificial Intelligence can be used to benefit society and our planet - in particular the practical use of AI for Social Innovation projects.

In this instalment, we look at the energy usage of AI, its carbon footprint, and what can be done to optimise it.

AI technologies are advancing rapidly, not just in their capabilities, but also in their widespread adoption across different business sectors. But AI models also consume vastly more energy than conventional computing, so the need for optimising their energy usage is critical.

Questions that we would like to address:

- Why does AI need so much energy? We give a brief overview of why AI is so energy and resource intensive.

- What can AI developers do? We look at how developers can reduce energy usage when programming AI, and also when setting up AI systems.

- What can AI do? We explore how AI applications and tools can also help reduce energy consumption of AI and other digital systems.

- What can Governments and Regulators do? Finally, we look at what policy makers can do to help lower the energy consumption of AI.

Why does AI need so much energy?

The big leaps forward we have seen in AI models recently have often been a result of using ever larger sets of data on ever larger hardware infrastructures, all of which require more energy to train. In addition, the exponential growth in popularity of AI tools means that the runtime footprint, that is the ongoing energy required to run some models in production, is also stratospheric.

Most AI companies keep the precise amount of energy they use under wraps. This might be down to a lack of focus on the topic, or it could be a reluctance to disclose exact numbers that might invite unwanted attention. But it could also simply be down to the lack of a unified approach for the calculation and the difficulty of collecting all the relevant data.

When looking at the energy cost of AI we should also look at the carbon footprint of AI, which of course is closely linked to energy usage. The best approach to measuring the carbon emissions of a complex system such as an AI model is a life cycle assessment. This covers the emissions from the materials and processes that go into making the system, the emissions from the system being used, and finally the emissions from disposing of the system at the end of its life.

A standard carbon report measures “Scope 1, 2 and 3 Emissions”:

- Scope 1 emissions are the direct emissions from sources the company itself owns or controls. For an AI system those are most likely quite low, although some of the company’s office and staff emissions would be covered here.

- Scope 2 emissions are the carbon emissions from generating the energy that the system consumes.

- Scope 3 emissions are all other emissions. These range from the emissions from raw materials and manufacturing the hardware, through the emissions of people actually using the system, to end of life disposal.
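
The three scopes can be tallied with simple arithmetic. The sketch below is only an illustration: the class name `CarbonReport`, its fields and every number in it are hypothetical placeholders, not measurements of any real AI system. It assumes Scope 2 can be approximated as energy consumed multiplied by the carbon intensity of the electricity grid.

```python
from dataclasses import dataclass


@dataclass
class CarbonReport:
    """Hypothetical tally of Scope 1, 2 and 3 emissions in tonnes of CO2e."""

    scope1_t: float                  # direct emissions (assumed value)
    energy_mwh: float                # electricity consumed by the system
    grid_intensity_t_per_mwh: float  # tonnes CO2e per MWh (assumed value)
    scope3_t: float                  # hardware, usage, disposal (assumed value)

    @property
    def scope2_t(self) -> float:
        # Scope 2 approximated as energy use times grid carbon intensity.
        return self.energy_mwh * self.grid_intensity_t_per_mwh

    @property
    def total_t(self) -> float:
        return self.scope1_t + self.scope2_t + self.scope3_t


# All inputs below are made-up placeholders for illustration only.
report = CarbonReport(
    scope1_t=10.0,
    energy_mwh=1_000.0,
    grid_intensity_t_per_mwh=0.4,
    scope3_t=600.0,
)
print(f"Scope 2: {report.scope2_t:.0f} t, total: {report.total_t:.0f} t")
```

In practice the Scope 3 figure is the hardest input to fill in, since it depends on manufacturing data that is rarely published.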

For an AI model, Scope 3 emissions are likely to be very high because training and running the model requires many thousands of advanced GPUs, which are highly carbon intensive to produce. Unfortunately, the major GPU manufacturers, such as Nvidia, do not release any carbon footprint data, and until they do, all Scope 3 carbon measurements of AI models are rough estimates.

That may explain why carbon footprint calculations for AI often start with the training of the model, although in some cases they also measure the energy footprint of the infrastructure that supports real-time inference, prediction, or other operations in production.

What is known about the energy consumption of the AI Training stage?

GPT-4 is assumed to have been trained using around 25,000 GPUs, which are among the most advanced and energy-intensive microprocessors available. That training would require a constant supply of about 20 megawatts of electricity for up to 100 days. According to the EPA greenhouse gas equivalencies calculator, the associated emissions are roughly the same as the annual emissions from 193 average gasoline-powered cars, or from 2,000 barrels of oil. (https://www.epa.gov/energy/greenhouse-gas-equivalencies-calculator#results)
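
To get a feel for the scale, the power and duration quoted above can be turned into a total energy figure. This is a back-of-the-envelope sketch that takes the article's numbers at face value and assumes the full 20 megawatts is drawn constantly for the full 100 days, so it is an upper bound rather than a measurement. The function name `training_energy_mwh` is made up for this example.

```python
def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in MWh for a constant power draw over a number of days."""
    hours = days * 24
    return power_mw * hours


POWER_MW = 20        # constant supply quoted in the text
DAYS = 100           # upper end of the training duration quoted in the text
GPU_COUNT = 25_000   # assumed number of GPUs quoted in the text

energy_mwh = training_energy_mwh(POWER_MW, DAYS)

# Average power per GPU, including cooling and other overhead, in kilowatts.
power_per_gpu_kw = POWER_MW * 1_000 / GPU_COUNT

print(f"Training energy (upper bound): {energy_mwh:,.0f} MWh")
print(f"Average draw per GPU incl. overhead: {power_per_gpu_kw:.1f} kW")
```

Under these assumptions the training run comes to 48,000 MWh, or 48 GWh, with an average of 0.8 kW per GPU once cooling and supporting infrastructure are included.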

What is the energy consumption of AI in production?

Once a model is fully trained, generating responses from prompts, often called inference, probably uses nearly as much electricity as training did, depending on the model. Meta attributed approximately one third of their internal end-to-end machine learning (ML) carbon footprint to model inference, with the remainder produced by data management, storage, and training (https://arxiv.org/pdf/2111.00364.pdf); similarly, a 2022 study from Google attributed 60 percent of its ML energy use to inference, compared to 40 percent for training. (https://arxiv.org/pdf/2204.05149.pdf)

To put this in perspective, generating a single AI image is estimated to need as much energy as fully charging a smartphone. Text generation consumes far less: 1,000 queries require about 16 percent of the energy needed for a full smartphone battery charge, which works out to roughly 1/6000th of a charge per query. (https://arxiv.org/pdf/2311.16863.pdf)
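
As a rough check on these comparisons, the sketch below takes two figures from the paragraph above as given: 16 percent of a smartphone charge per 1,000 text queries, and one full charge per generated image. The constant and function names are invented for this example, and the result is only as good as those two inputs.

```python
# Share of one full smartphone charge, as quoted in the text.
TEXT_CHARGE_SHARE_PER_1000_QUERIES = 0.16
IMAGE_CHARGE_SHARE_PER_IMAGE = 1.0


def charge_share_per_query(share_per_1000: float) -> float:
    """Share of a smartphone charge used by a single text query."""
    return share_per_1000 / 1000


per_query = charge_share_per_query(TEXT_CHARGE_SHARE_PER_1000_QUERIES)

# How many text queries fit into one full charge, and so into one image.
queries_per_charge = round(1 / per_query)
queries_per_image = round(IMAGE_CHARGE_SHARE_PER_IMAGE / per_query)

print(f"Share of a charge per text query: {per_query:.5f}")
print(f"Text queries per full charge: {queries_per_charge:,}")
print(f"Text queries per generated image: {queries_per_image:,}")
```

On these figures a single text query uses about 1/6250th of a smartphone charge, so one generated image costs about as much energy as several thousand text queries.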

Task specific AI models vs Multi-purpose AI models

AI can either be trained on a specific task or on a multitude of different tasks. The range of tasks a model can solve, its task diversity, has an impact on the energy consumption of the model. While models with diverse abilities appear to be more efficient in terms of their overall training footprint, in the inference stage the breadth of their ability requires on average more energy than task-specific models need.

What is the current status of AI energy consumption?

While the world's energy production is only increasing very slowly - about 1 to 2 percent per year - the amount of energy used by machine learning and AI is increasing at an alarming rate. One study shows that at the current growth rate, AI would consume all available energy by around 2047. Plainly, this is not sustainable. Furthermore, this amount of energy use is not cheap, so reducing AI energy use is one of those happy sweet spots where cutting costs and cutting carbon go hand in hand. This is another strong incentive aside from sustainability to optimise AI energy usage.

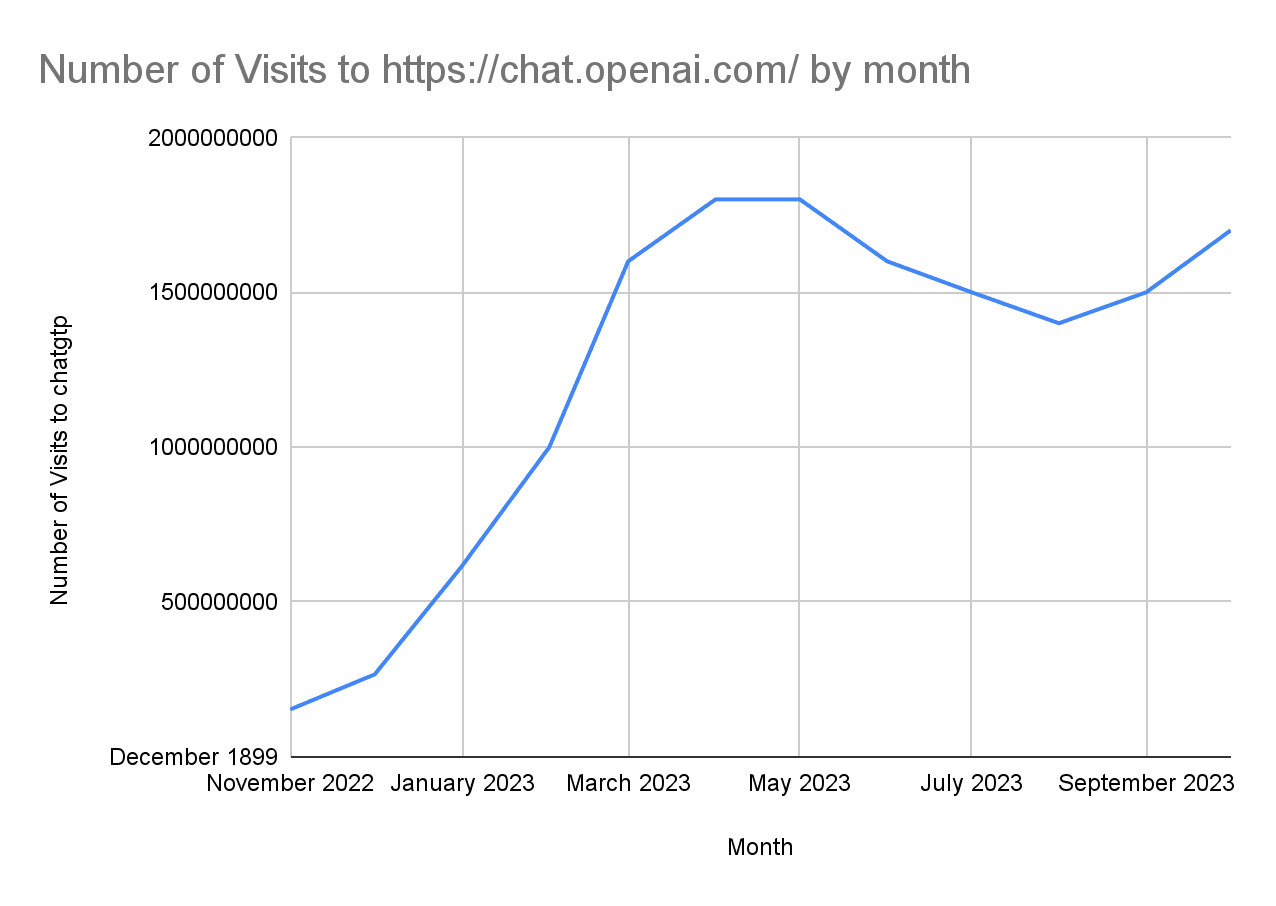

Source: Similarweb

Why is energy consumption going up so fast?

In short, we can largely blame what in German is called the “Goldgräberstimmung”, or the “Gold Rush Effect”, that is driving a lot of AI investment.

“The compute demand of neural networks is insatiable,” says Ian Bratt, fellow and senior director of technology at Arm. “The larger the network, the better the results, and the more problems you can solve. Energy usage is proportional to the size of the network. Therefore, energy efficient inference is absolutely essential to enable the adoption of more and more sophisticated neural networks and enhanced use-cases, such as real-time voice and vision applications.”

This “arms-race” for compute is not only bad for the planet, but also builds the barriers to participation ever higher. Small and medium-sized players simply cannot compete in the market with the resources available to the big fish, like OpenAI and Google.

How can we rein this situation back in? “We have forgotten that the driver of innovation for the last 100 years has been efficiency,” says Steve Teig, CEO of Perceive. “That is what drove Moore’s Law. We are now in an age of anti-efficiency.” (https://semiengineering.com/ai-power-consumption-exploding/)

Once AI is efficient at squeezing the most out of less computing power, hopefully the barriers to more breakthroughs will drop and the carbon footprint will drop with them. But will efficiency solve the issue? Possibly not if we consider Jevons’ Paradox, also sometimes called the “Rebound Effect”. William Stanley Jevons was a 19th-century economist who noticed that when the efficiency of a machine or process was improved, the overall energy used often wasn’t reduced; instead energy use stayed the same or even rose while overall production went up. We can see this effect everywhere, not least in the way that data centres, while getting more energy efficient, end up storing more and more data and requiring even more energy.

The lesson of Jevons’ Paradox is that if we want to reduce overall energy use, we have to think more holistically about a system, and not simply focus on efficiency.
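
A toy calculation shows how the rebound effect can play out. The numbers below are entirely hypothetical and chosen only to illustrate the mechanism: energy per query is halved, but cheaper queries lead to three times as much usage.

```python
def total_energy_wh(energy_per_query_wh: float, queries: int) -> float:
    """Total energy is the per-query cost multiplied by the number of queries."""
    return energy_per_query_wh * queries


# Hypothetical scenario: all values are made up for illustration.
before = total_energy_wh(energy_per_query_wh=1.0, queries=1_000_000)
after = total_energy_wh(energy_per_query_wh=0.5, queries=3_000_000)

change = after / before
print(f"Before: {before:,.0f} Wh, after: {after:,.0f} Wh")
print(f"Total energy changed by a factor of {change:.1f}")
```

In this made-up scenario each query is twice as efficient, yet total energy use rises by half because demand grew faster than efficiency improved.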

If that became the goal, a truly energy-efficient AI ecosystem might not only save more energy, but also foster broader innovation. Lowering the energy footprint of AI usage can make AI technologies more widely accessible, for example by enabling smaller enterprises and research initiatives to participate in the development and deployment of AI solutions. That may in turn spur a more diverse range of innovations.

Read on in part 2: What can AI and AI developers do to reduce the carbon footprint of AI